Jobs

Jobs are processing tasks that you can execute directly through the Web app without starting an interactive Session. They are particularly useful for reproducible workflows that do not require manual interaction through a graphical user interface (GUI), and they can run uninterrupted for longer than a Session (up to 72 h).

Job Templates

Each job is defined by a Job Template, which consists of a bash script containing the necessary processing commands and an associated Environment that includes all the required software tools. You can conveniently configure environment variables as input parameters, allowing the same job script to run with different settings and inputs.

Creating a Job Template

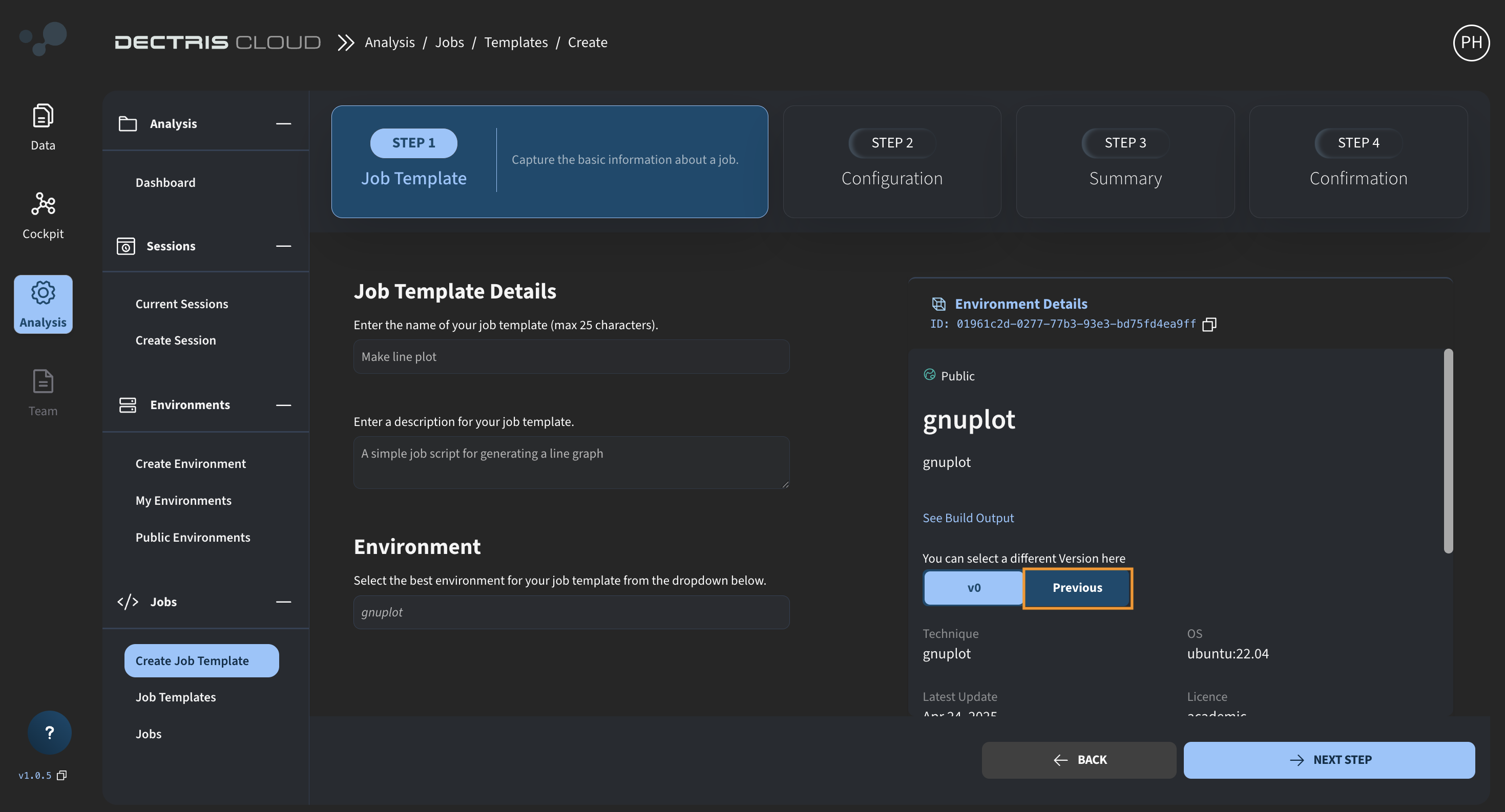

Start creating a Job Template by navigating to Analysis → Jobs → Create Job Template:

Here you can specify the initial details of the Job Template, such as its name, a description, and the Environment it should run with. If your Environment has several versions, you can click on the Previous tab (marked with an orange box) to choose the version you want to use.

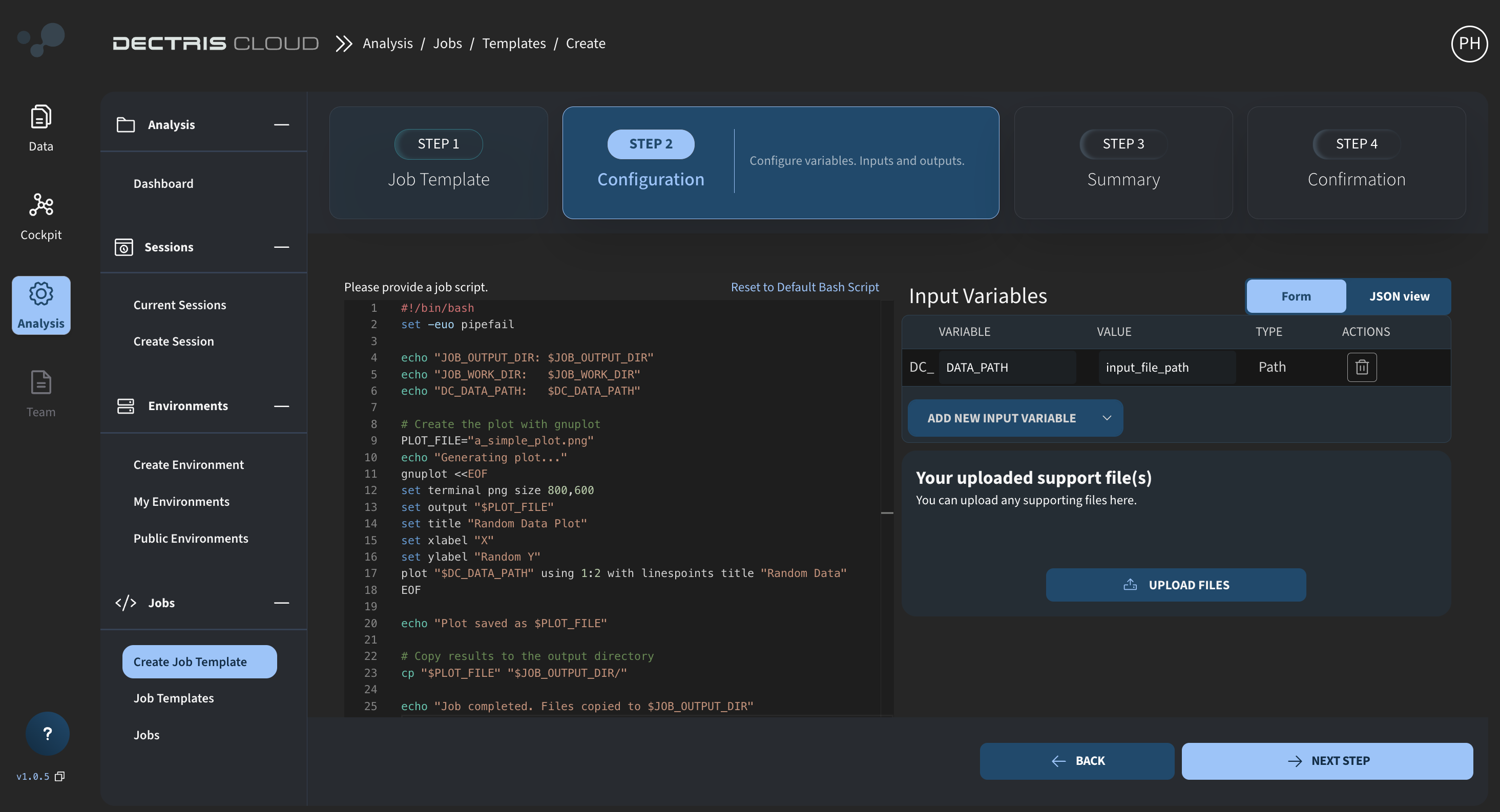

Clicking on NEXT STEP brings you to the next stage of the creation process, where you can define the analysis bash script:

Within the bash script, you specify the exact commands the Job should run. Take note of the following predefined environment variables:

- JOB_WORK_DIR :: Contains the path to the default /work directory for the job.

- JOB_OUTPUT_DIR :: Contains the path to the output directory. Files copied to the output directory appear in the output overview in the Jobs Overview Table and are also accessible in the 'Processed' folder of the experiment.

- JOB_TEMPLATE_DIR :: Contains the path to the directory in which the support files are uploaded. Files in this directory are not writeable, but can be copied to the working directory.
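As a minimal sketch of how the working and output directories might be used together, the script below does its intermediate work in JOB_WORK_DIR and copies only the final result to JOB_OUTPUT_DIR. The file names (`intermediate.txt`, `summary.txt`) and the placeholder processing are hypothetical, and the `mktemp` fallbacks are an assumption added only so the sketch can also be tried outside the platform, where the variables are not set.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Predefined on the platform; the mktemp fallbacks are an assumption
# so that the sketch can also be run locally.
JOB_WORK_DIR="${JOB_WORK_DIR:-$(mktemp -d)}"
JOB_OUTPUT_DIR="${JOB_OUTPUT_DIR:-$(mktemp -d)}"

# Do all intermediate work in the working directory.
cd "$JOB_WORK_DIR"
printf 'sample_1\nsample_2\nsample_3\n' > intermediate.txt  # placeholder for real processing

# Derive a final result from the intermediate file (here: its line count).
grep -c '' intermediate.txt > summary.txt

# Files copied to the output directory appear in the output overview.
cp summary.txt "$JOB_OUTPUT_DIR/"
echo "Output files: $(ls "$JOB_OUTPUT_DIR")"
```

Keeping intermediate files in the working directory and copying only final results keeps the Job output short and easy to inspect.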

In addition, you can define your own environment variables as configurable Input Variables. Each Input Variable has a hardcoded DC_ prefix, which must be included when referring to the variable in the bash script. The value given for each Input Variable is a default value, which can be overwritten when running the job.
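For illustration, a job script might read such a variable as sketched below. DC_THRESHOLD is a hypothetical Input Variable (assumed here to have been defined with the name THRESHOLD), and the `0.5` fallback and the numeric check are assumptions added so the sketch also runs outside the platform.

```shell
#!/usr/bin/env bash
set -euo pipefail

# DC_THRESHOLD is a hypothetical Input Variable. On the platform its value
# comes from the template default or from the value given when running the
# Job; the 0.5 fallback is only for local runs.
threshold="${DC_THRESHOLD:-0.5}"

# Reject values that are not plain non-negative numbers before doing any work.
if ! [[ "$threshold" =~ ^[0-9]+([.][0-9]+)?$ ]]; then
  echo "DC_THRESHOLD must be a non-negative number, got: $threshold" >&2
  exit 1
fi

echo "Running with threshold=$threshold"
```

Validating inputs at the top of the script means a mistyped value stops the Job early instead of after a long computation.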

Note also that Input Variables with the type "Path" (as defined above) are special, because they can point to a data destination within an experiment or project.
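A script can then treat a "Path" variable like any other file system path. The sketch below uses DC_DATA_PATH as the example and handles both a folder and a single file, since either might be selected. The stand-in folder and the file names `scan_a.dat` and `scan_b.dat` are hypothetical and exist only so the sketch runs outside the platform.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Local stand-in (assumption): on the platform DC_DATA_PATH is already set.
if [ -z "${DC_DATA_PATH:-}" ]; then
  DC_DATA_PATH="$(mktemp -d)"
  touch "$DC_DATA_PATH/scan_a.dat" "$DC_DATA_PATH/scan_b.dat"
fi

# Count the input files, whether the path is a folder or a single file.
if [ -d "$DC_DATA_PATH" ]; then
  file_count="$(find "$DC_DATA_PATH" -type f | wc -l | tr -d ' ')"
elif [ -f "$DC_DATA_PATH" ]; then
  file_count=1
else
  echo "DC_DATA_PATH does not exist: $DC_DATA_PATH" >&2
  exit 1
fi

echo "Found $file_count input file(s) under $DC_DATA_PATH"
```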

Finally, support files such as .py files can be uploaded and accessed via the JOB_TEMPLATE_DIR directory.
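Because files in JOB_TEMPLATE_DIR are not writeable, a common pattern is to copy them to the working directory before use. The sketch below assumes a hypothetical support file named `helper.sh`; a .py file would be copied the same way and run with the interpreter provided by your Environment. The block that creates a stand-in directory is an assumption so the sketch also runs outside the platform.

```shell
#!/usr/bin/env bash
set -euo pipefail

JOB_WORK_DIR="${JOB_WORK_DIR:-$(mktemp -d)}"

# Local stand-in for the support directory (assumption: only needed
# off-platform, where JOB_TEMPLATE_DIR is not set).
if [ -z "${JOB_TEMPLATE_DIR:-}" ]; then
  JOB_TEMPLATE_DIR="$(mktemp -d)"
  printf '#!/usr/bin/env bash\necho "helper received: $1"\n' > "$JOB_TEMPLATE_DIR/helper.sh"
fi

# Copy the support file to the working directory and make the copy
# writeable, in case it inherits read-only permissions.
cp "$JOB_TEMPLATE_DIR/helper.sh" "$JOB_WORK_DIR/helper.sh"
chmod u+w "$JOB_WORK_DIR/helper.sh"

# Run the copied helper with one example argument.
helper_output="$(bash "$JOB_WORK_DIR/helper.sh" demo)"
echo "$helper_output"
```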

Managing Job Scripts

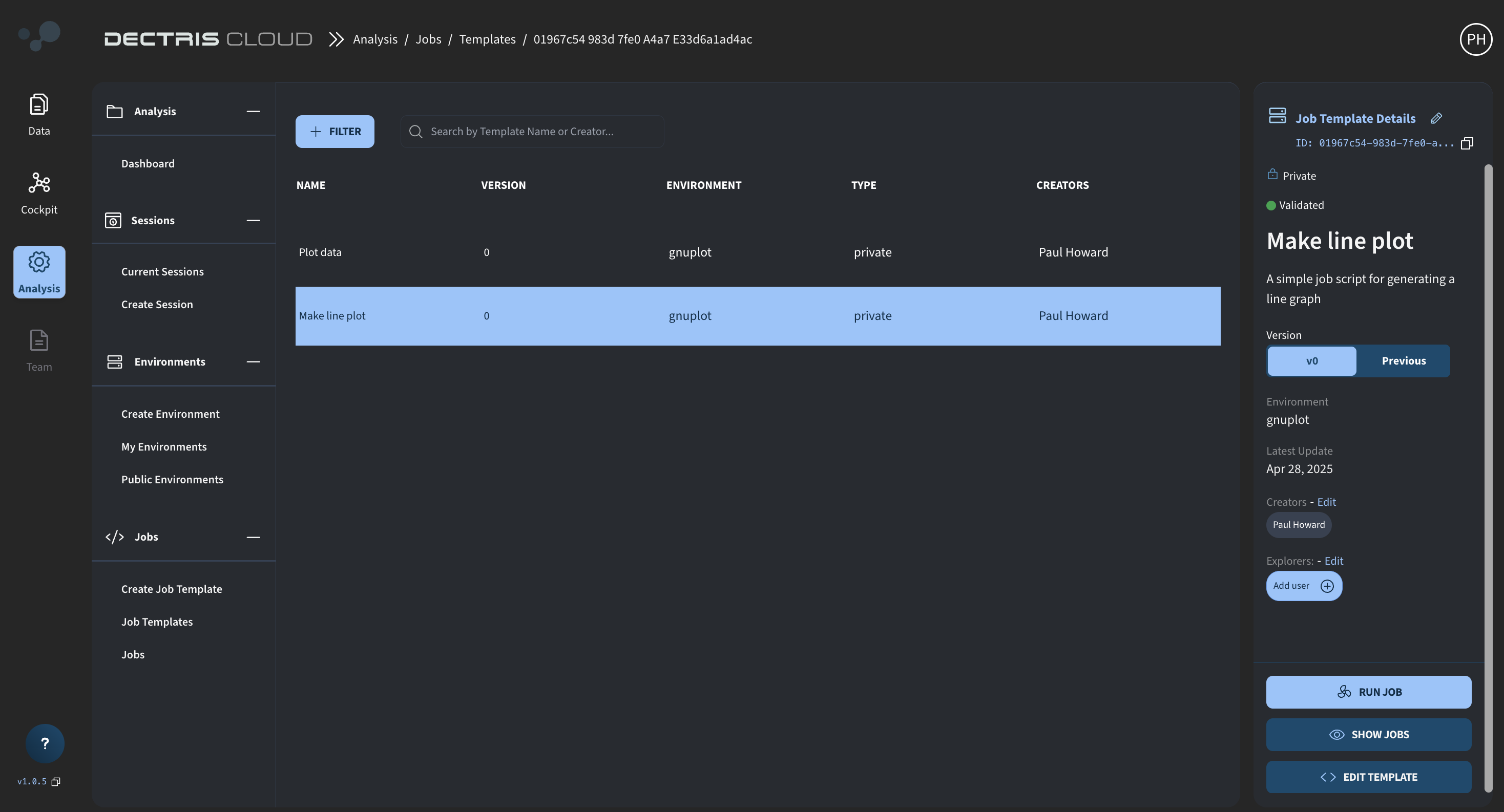

Saved Job Templates can be viewed in the table at Analysis → Jobs → Job Templates:

By clicking on your Job Template in this table, you can access the Job Template Card. Similar to Environments, you can assign Creators, who can edit the template and run Jobs, and Explorers, who can only run Jobs. Each newly created Job Template initially has an "unvalidated" status, which updates to "validated" after the first successful Job run. The EDIT TEMPLATE button allows you to create new versions of the template, while the RUN JOB button initiates the execution of the Job.

Running a Job

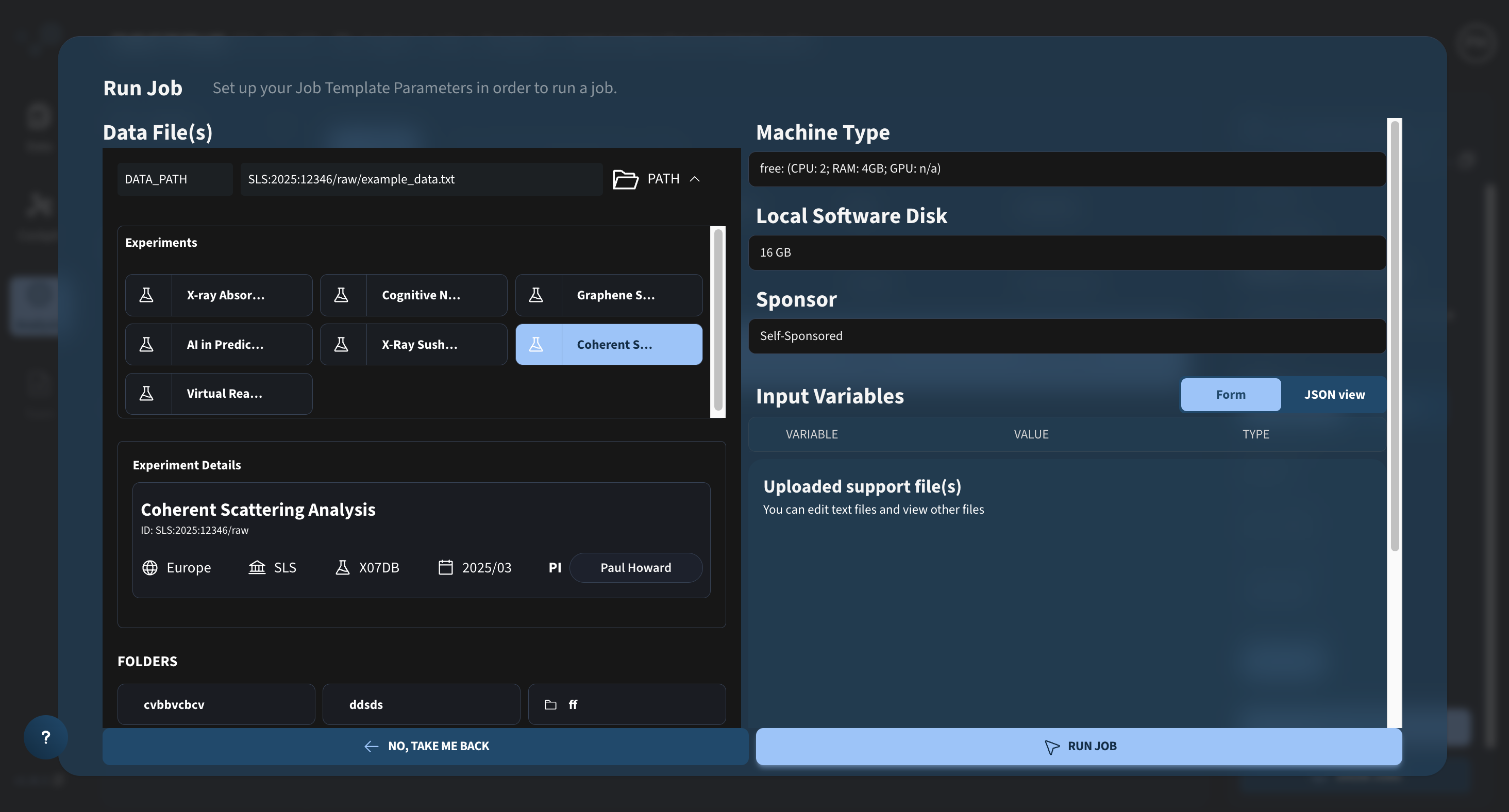

When you click RUN JOB, a job configuration window opens, allowing you to set and customize the input parameters before submitting the job for execution. The left part of the window contains a wizard for configuring the path to your data. Select your experiment and navigate through the folders to choose the data the Job should run with. This data path is automatically assigned to the DC_DATA_PATH Input Variable defined during the Job Template creation process:

As with Sessions, a Job has several additional configuration options:

- Machine Type :: The number of CPUs, the amount of RAM, and the GPU type (if applicable) that the Job should use.

- Local Software Disk :: The disk size allocated for the local work directory while the Job is running (NOT data storage space or Environment storage space). Choose a size that accommodates the temporary and output files generated during the Job run.

- Sponsor :: Choose who the consumed resources should be credited to.

- Input Variables :: Extra input variables that can be configured every time a Job is run, as defined by the Job Template. These could be threshold parameters, job hyperparameters, etc.
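To help choose a suitable Local Software Disk size, a job script could report how much space its working directory uses, for example at the end of a test run. This is only a sketch: the 1 MiB `scratch.bin` file is a hypothetical placeholder for real temporary data, and the `mktemp` fallback is an assumption for running the sketch outside the platform.

```shell
#!/usr/bin/env bash
set -euo pipefail

JOB_WORK_DIR="${JOB_WORK_DIR:-$(mktemp -d)}"

# Placeholder for real processing: write a 1 MiB temporary file.
head -c 1048576 /dev/zero > "$JOB_WORK_DIR/scratch.bin"

# Disk usage of the working directory in kilobytes.
used_kb="$(du -sk "$JOB_WORK_DIR" | cut -f1)"
echo "Working directory currently uses ${used_kb} KB"

# Free space left on the file system that holds the working directory.
df -Pk "$JOB_WORK_DIR"
```

The reported usage from a small test run gives a starting point for sizing the disk for larger inputs.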

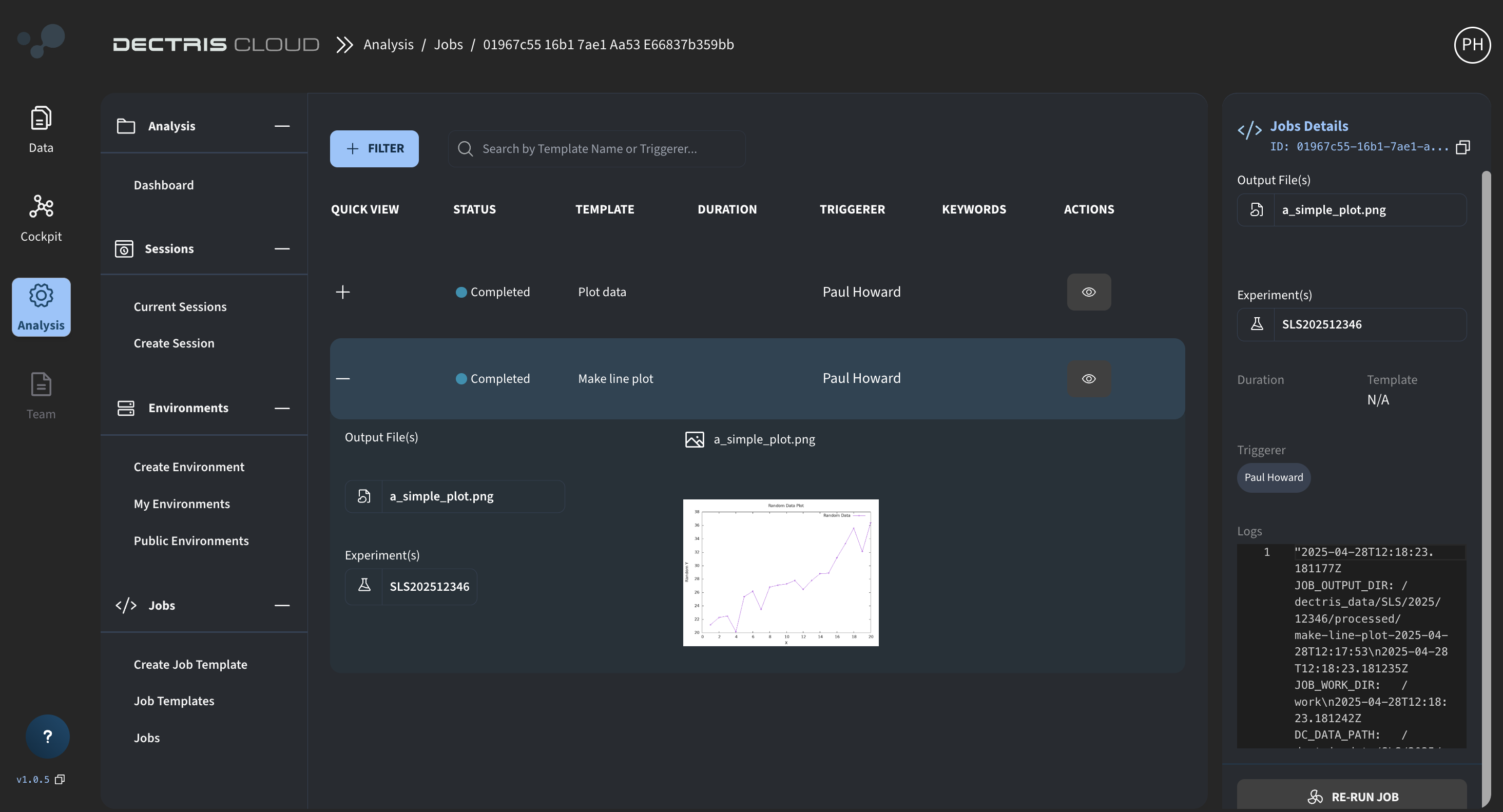

Once a Job has been started, it can be viewed in the Jobs Overview Table, accessed through Analysis → Jobs → Jobs:

Job status

Jobs in the Jobs Table can have one of the following statuses:

- Pending :: The compute node requested for the job is currently being allocated and is spinning up. Consumption (CPUhs and GPUhs) is not tracked for the user or sponsor in this state.

- Running :: The job is being executed. Consumed CPUhs and GPUhs are tracked and credited to the sponsor.

- Completed :: The job has finished and the results should be viewable in the Jobs Table as well as in the Processing folder of the experiment.

- Failed :: An error occurred that prevented the job from finishing normally.

Job output

Once a Job has completed, the resulting output can be viewed in the Jobs Table by clicking on the "+" symbol to expand the view:

Further details, such as the Job ID and log file, can be viewed by clicking the eye symbol in the table view.